2 Descriptive statistics

When we have finished this chapter, we should be able to:

2.1 Data

We will explore a dataset containing 258 participants (rows) and 8 variables (columns). The variables include sex (female/male), age (in years), time spent on the internet and social media (in hours), the total score (0-30) from responses to 10 questions on the Rosenberg Self-Esteem Scale (RSES), and the categorization of that score into three levels of self-esteem: low (0-15), medium (16-19), and high (20-30) (García et al. 2019).

2.2 Summarizing categorical data (Frequency Statistics)

2.2.1 One variable frequency tables and plots

The first step in analyzing a categorical variable is to count the occurrences of each label and calculate their frequencies. This collection of frequencies for all possible categories is known as the frequency distribution of the variable. Additionally, we can express these frequencies as proportions of the total number of observations, which are referred to as relative frequencies. If we multiply these proportions by 100, we obtain percentages (%).

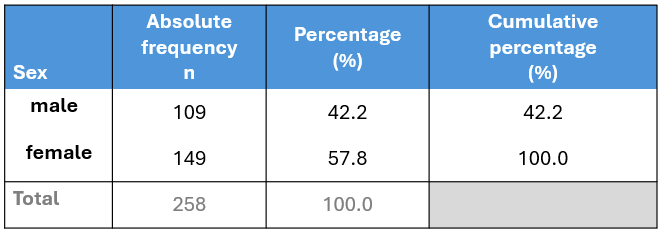

Sex variable

Let’s create a frequency table for the sex variable:

The table displays the following:

Absolute frequency (n): The number of participants in each category (male: 109, female: 149).

Percentage (%): The proportion of participants in each category relative to the total number of participants (the relative frequency), multiplied by 100 (male: 109/258 × 100 = 42.2%; female: 149/258 × 100 = 57.8%). Note that the percentages sum to 100% (42.2% + 57.8%).

Cumulative percentage (%): The sum of the percentages of all categories up to and including the current one. For example, the cumulative percentage for the male category is 42.2%. Adding the female category gives 42.2% + 57.8% = 100%. The cumulative percentage of the final category therefore always equals 100%.
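The three columns of the frequency table can be reproduced with a few lines of code. This is a minimal sketch, not Jamovi's implementation: the helper name `frequency_table` is hypothetical, and the list of labels is rebuilt from the counts reported above (109 males, 149 females) rather than read from the original data file.

```python
from collections import Counter


def frequency_table(labels, order):
    """Return rows of (category, n, percent, cumulative percent).

    `order` fixes the row order, which the cumulative column depends on.
    """
    counts = Counter(labels)
    total = sum(counts.values())
    rows = []
    running = 0
    for category in order:
        n = counts[category]
        running += n  # running count up to and including this category
        rows.append((
            category,
            n,
            round(100 * n / total, 1),        # percentage
            round(100 * running / total, 1),  # cumulative percentage
        ))
    return rows


# Rebuild the sex variable from the reported counts (an assumption for illustration).
sex = ["male"] * 109 + ["female"] * 149
for row in frequency_table(sex, order=["male", "female"]):
    print(row)
```

Accumulating the counts first and dividing afterwards guarantees that the last cumulative percentage is exactly 100, rather than a sum of rounded percentages.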

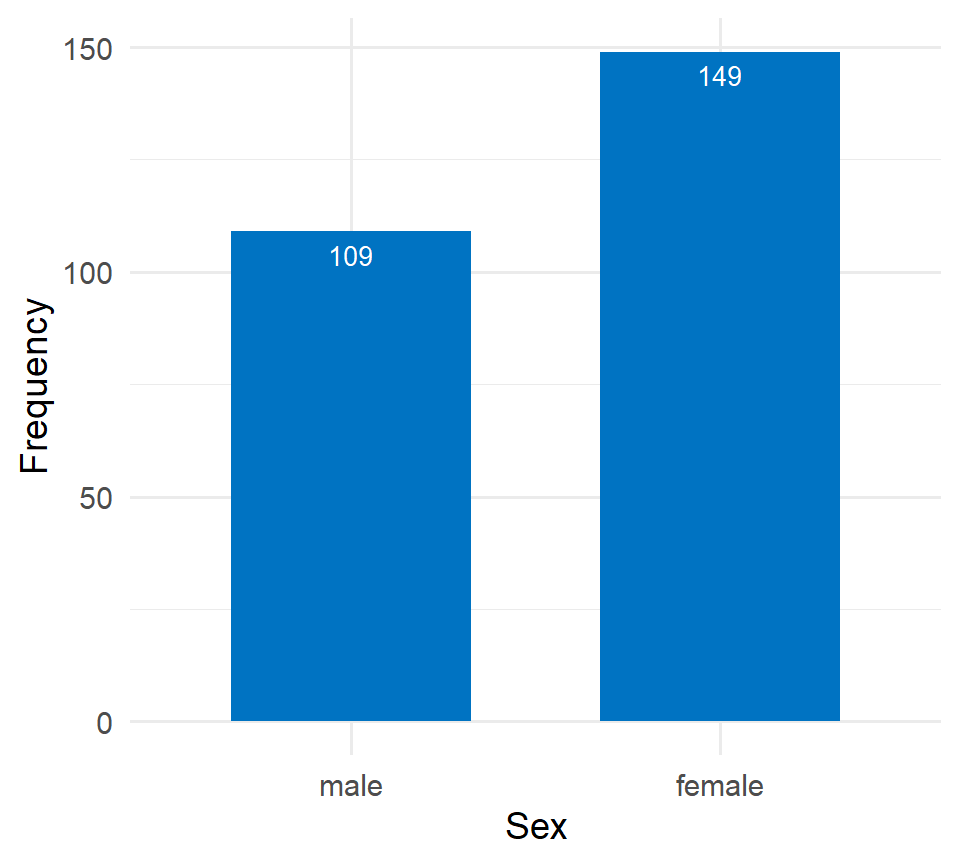

While frequency tables are extremely useful, plotting the data often provides a clearer presentation. For categorical variables, such as sex, it is straightforward to display the number of occurrences in each category using bar plots. The x-axis typically represents the categories of the variable—in this case, “male” and “female”. The y-axis represents the frequency or count of occurrences for each category.

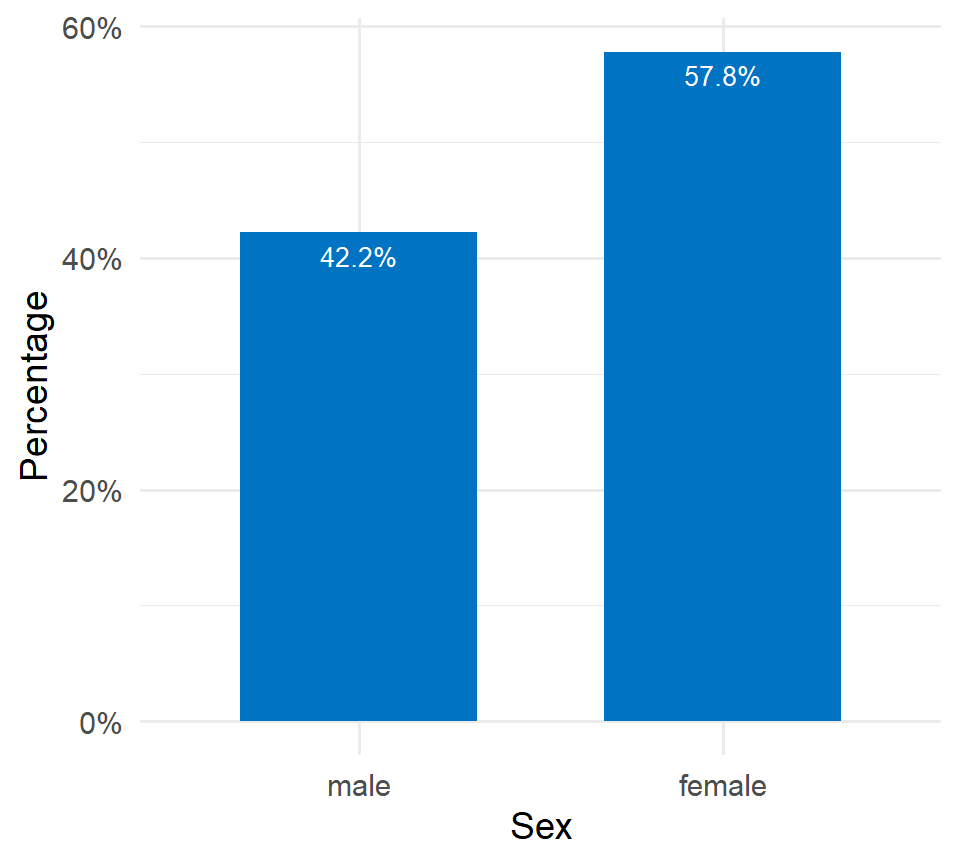

If the y-axis represents percentages (%), then each bar’s height corresponds to the percentage of participants in that category. For example, the percentage of female participants is 57.8%.

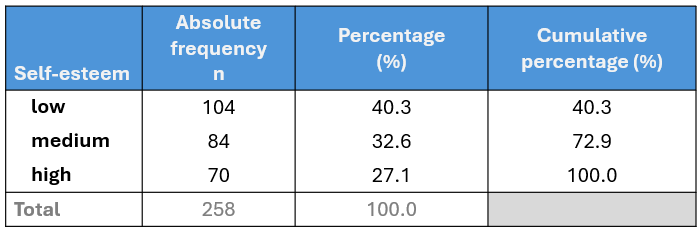

Score_cat variable (self-esteem)

Similarly, we can create the frequency table for the Score_cat (self-esteem) variable:

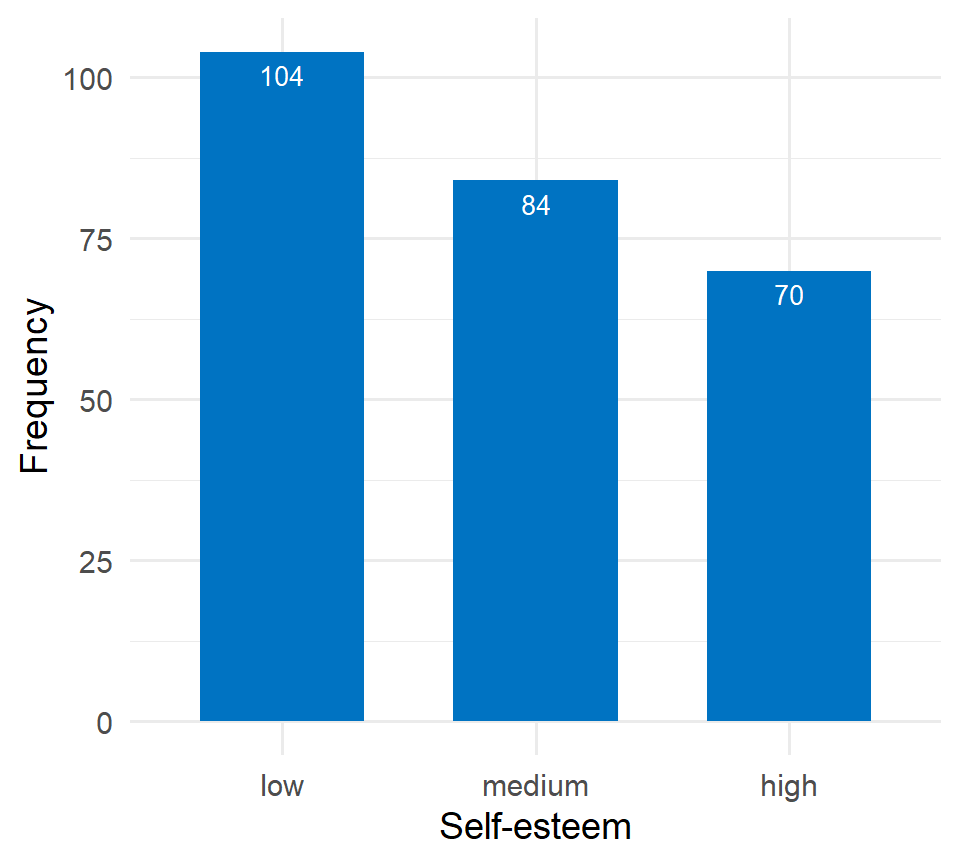

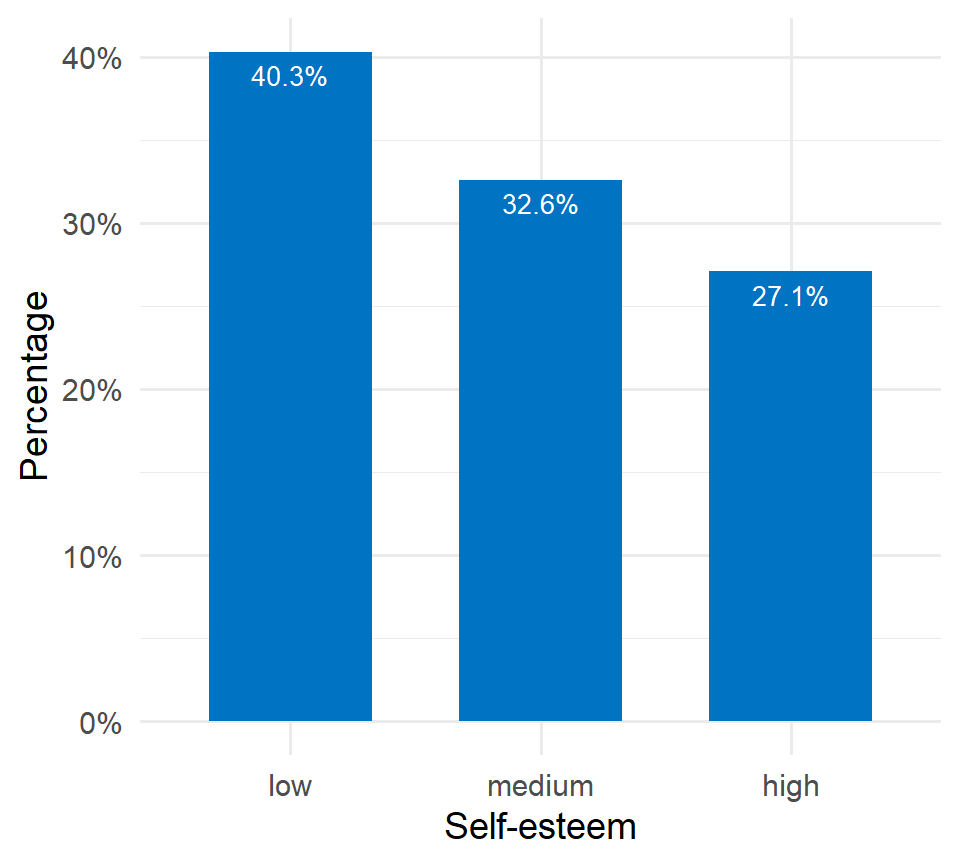

In the above table, we observe that 40.3% (104 out of 258) of participants have a low level of self-esteem. When we combine the low and medium categories, the cumulative percentage is: 40.3% + 32.6% = 72.9%. Finally, for all categories (low, medium, high), the cumulative percentage sums to 72.9% + 27.1% = 100%.
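Because self-esteem is an ordinal variable, its cumulative percentages only make sense when the levels are listed in increasing order. The sketch below makes that ordering explicit. The low count (104) is stated in the text; the medium (84) and high (70) counts are back-calculated from 32.6% and 27.1% of 258, so treat them as an assumption to be checked against the Jamovi table.

```python
from itertools import accumulate

# Levels listed in increasing order of self-esteem.
levels = ["low", "medium", "high"]
# 104 is given in the text; 84 and 70 are back-calculated (assumption).
counts = [104, 84, 70]
total = sum(counts)

percent = [round(100 * n / total, 1) for n in counts]
# Accumulate counts first, then convert, so the last value is exactly 100.
cumulative = [round(100 * c / total, 1) for c in accumulate(counts)]

for level, n, p, c in zip(levels, counts, percent, cumulative):
    print(f"{level:<7}{n:>5}{p:>7}%{c:>7}%")
```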

Figure 2.5 illustrates the frequency distribution of self-esteem. The horizontal axis (x-axis) displays the different self-esteem categories, ordered according to increasing self-esteem levels, while the vertical axis (y-axis) shows the frequency of each category.

Figure 2.6 illustrates the distribution of self-esteem using percentages. The largest group of participants falls into the low self-esteem category, accounting for 40.3% (104 out of 258), which highlights a substantial portion of the sample that may benefit from targeted interventions or support.

When constructing bar plots, keep the following guidelines in mind:

- All bars should have equal width and equal spacing between them.

- The height of each bar should correspond to the data it represents.

- The bars should be plotted against a common zero-valued baseline.

2.2.2 Two variable tables (Contingency tables) and plots

A. Frequency contingency table

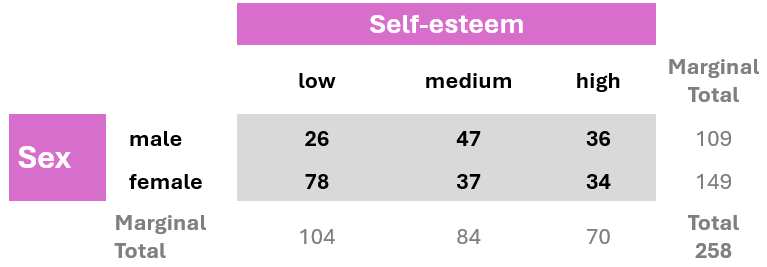

In addition to tabulating each variable separately, we might also be interested in exploring the association between two categorical variables. In this case, the resulting frequency table is a cross-tabulation, where each combination of levels from both variables is displayed. This type of table is called a contingency table because it shows the frequency of each category in one variable (e.g., sex), contingent upon the specific levels of the other variable (e.g., self-esteem), as shown in Figure 2.7.

Note: The table also typically includes row and column totals, also known as marginal totals, that sum the counts for each row and column, respectively.

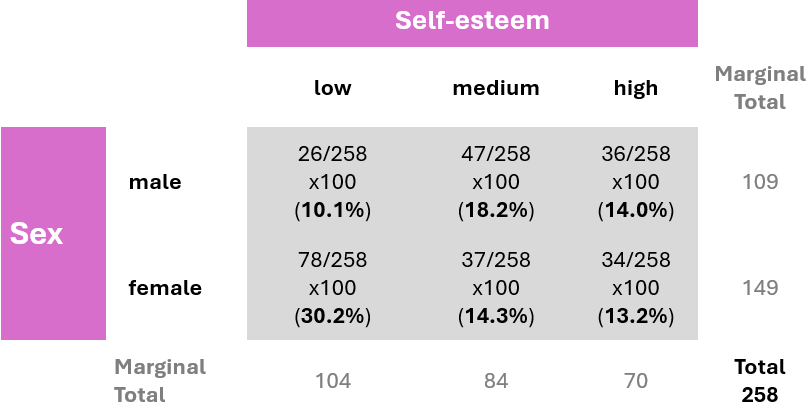

B. Joint distribution contingency table

A joint distribution contingency table displays both the frequency of observations across categories of two variables and the percentage distributions of those frequencies. The percentages are calculated by dividing the frequency in each cell by the overall total (258), then multiplying the result by 100. This shows the percentage of the total observations that fall into each category combination.
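The joint percentages and marginal totals can be computed directly from the cell counts. In this sketch only the female/low cell (78) appears in the text; the remaining cells are back-calculated from the row percentages reported for this dataset, so they are an assumption and should be checked against Figure 2.7.

```python
# Cell counts: sex (rows) by self-esteem (columns).
# Only female/low = 78 is stated in the text; other cells are back-calculated (assumption).
table = {
    "male": {"low": 26, "medium": 47, "high": 36},
    "female": {"low": 78, "medium": 37, "high": 34},
}
levels = ["low", "medium", "high"]

# Marginal totals.
row_totals = {sex: sum(cells.values()) for sex, cells in table.items()}
col_totals = {lvl: sum(table[sex][lvl] for sex in table) for lvl in levels}
grand_total = sum(row_totals.values())

# Joint distribution: every cell divided by the grand total, times 100.
joint_pct = {
    sex: {lvl: round(100 * table[sex][lvl] / grand_total, 1) for lvl in levels}
    for sex in table
}

print("row totals:", row_totals)
print("column totals:", col_totals)
print("grand total:", grand_total)
print("joint %:", joint_pct)
```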

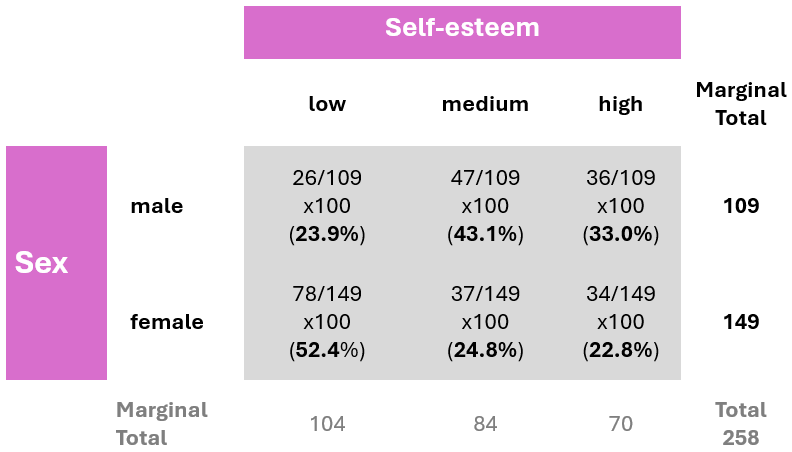

C. Conditional distribution contingency table

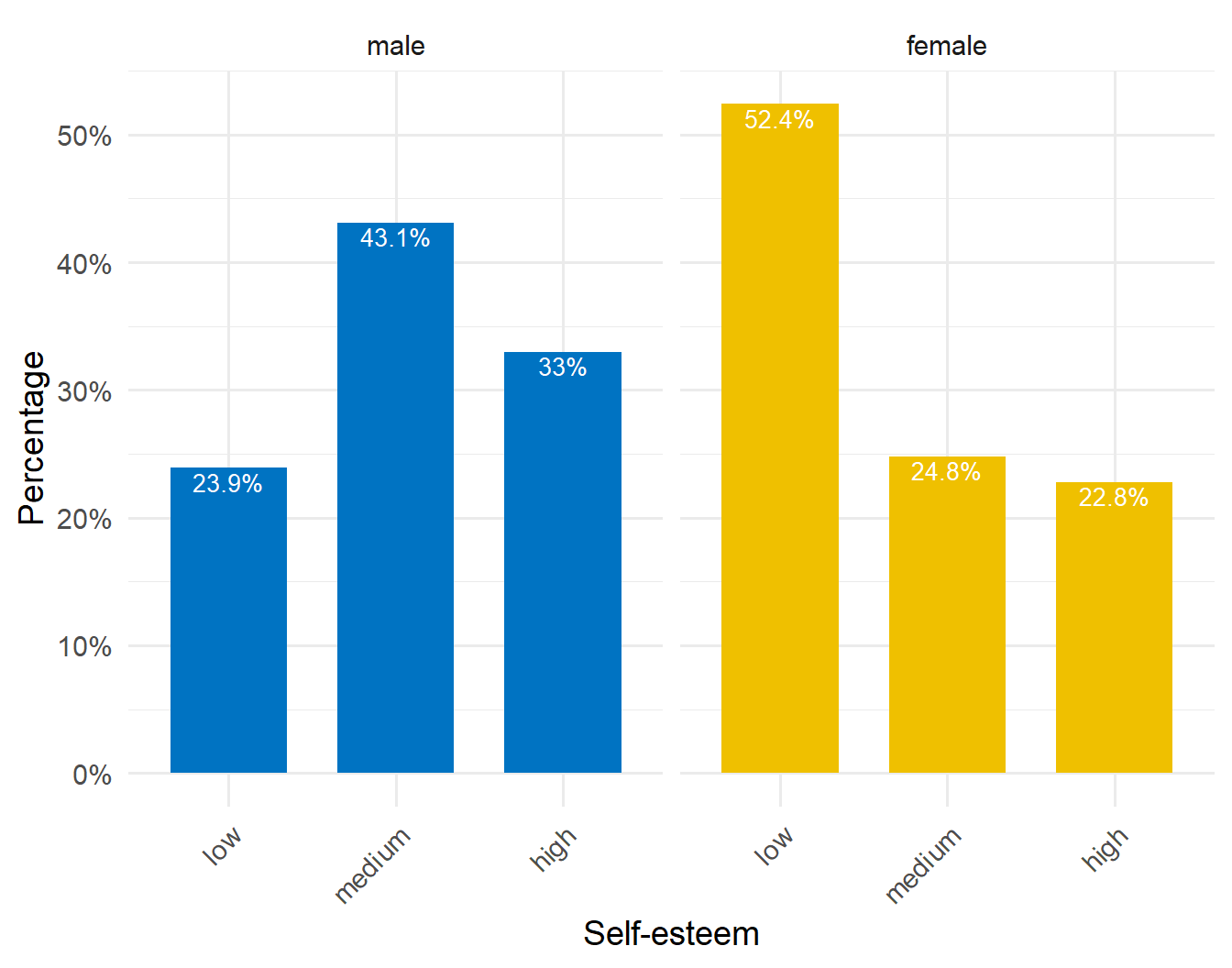

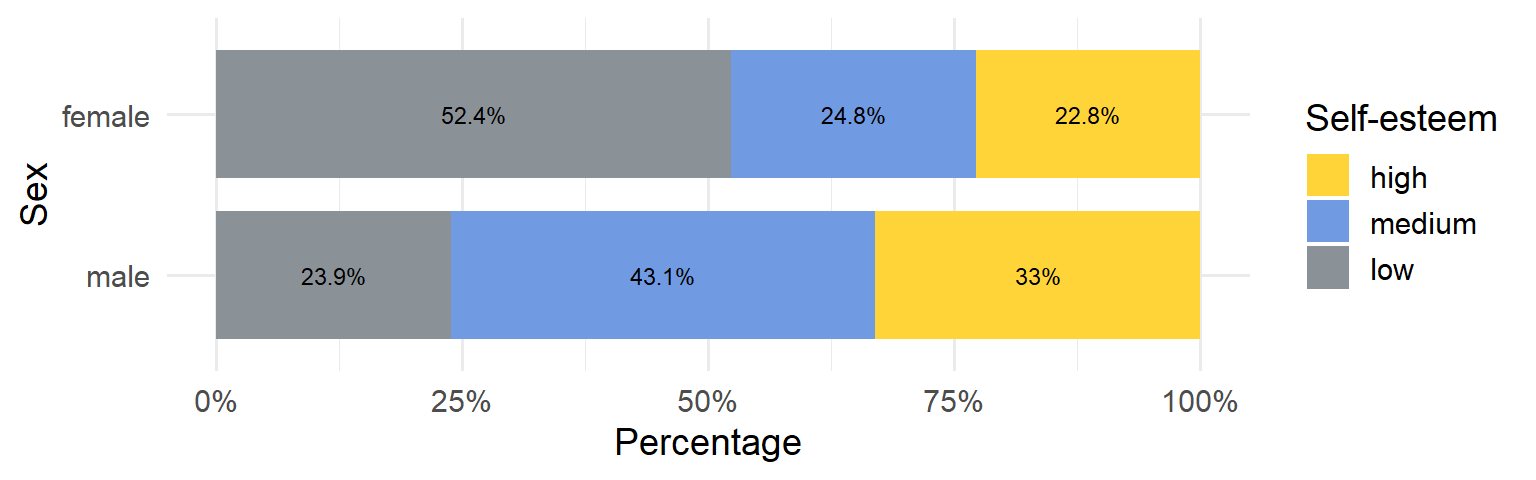

Suppose we are interested in the distribution of self-esteem levels within each sex group, meaning we are observing how self-esteem vary among males and among females. By conditioning on sex, we divide each cell’s frequency by the corresponding row total (row marginal total), rather than the overall total. This method allows us to examine the conditional distribution of self-esteem within each sex group. For example, the percentage of participants with low self-esteem, given that the participant is female, is calculated as (78/149) x 100 ≈ 52.4%.

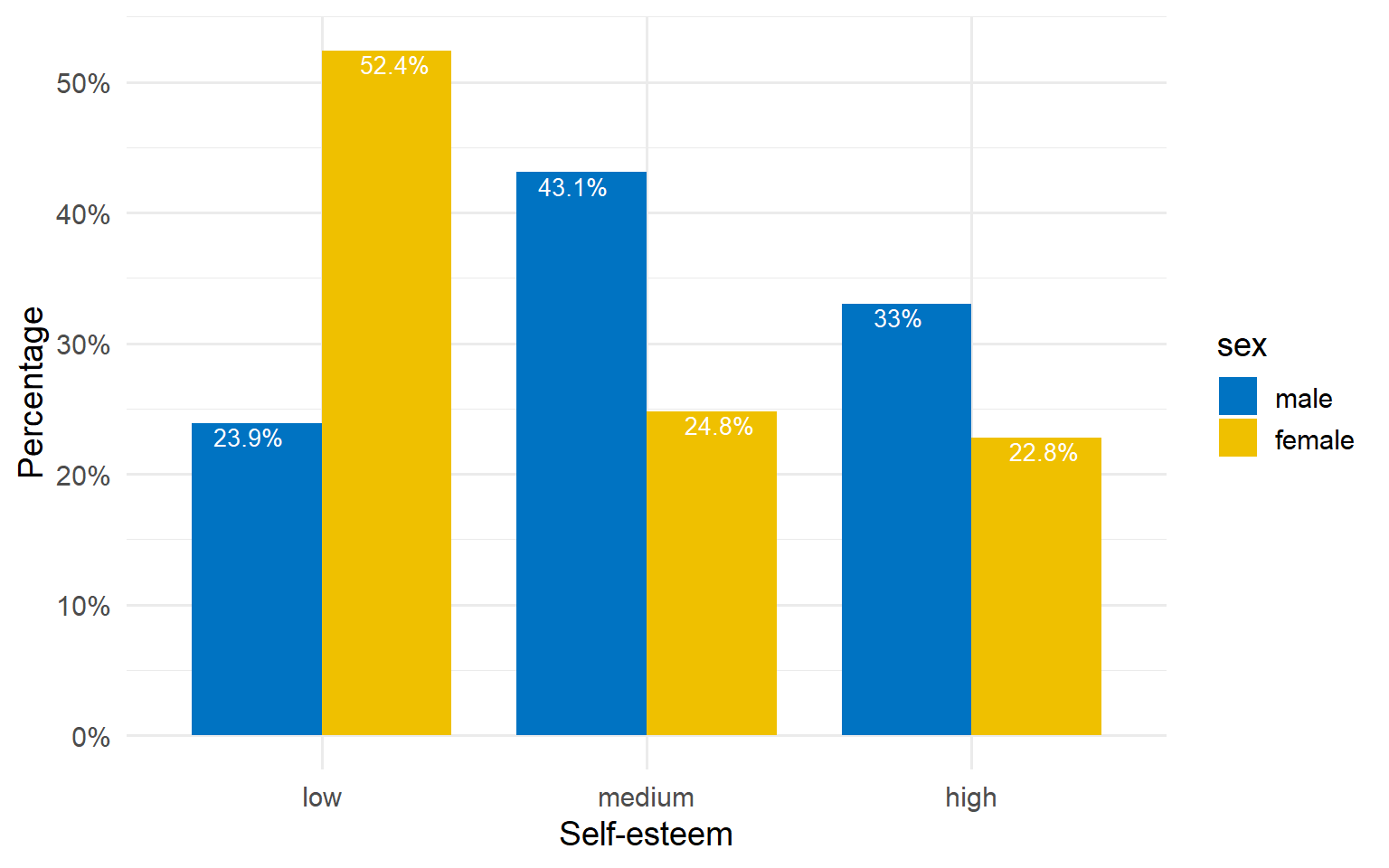

This data analysis indicates notable differences in self-esteem levels between male and female participants. Specifically, the percentage of female participants with low self-esteem (52.4%) is substantially greater than that of male participants (23.9%).

We can also graphically present the data in the table shown in Figure 2.9. A side-by-side bar plot (Figure 2.10) or, preferably, a grouped bar plot (Figure 2.11) can facilitate easier visual comparisons.

Alternatively, we can create a stacked bar plot, where the bars are segmented by self-esteem levels. Figure 2.12 illustrates a 100% stacked bar plot, which displays the percentage of each self-esteem level (low, medium, high) among male and female participants, emphasizing the relative differences within each group. For example, the plot shows that a higher proportion of females have low self-esteem (52.4%) compared to males (23.9%), while males have a higher proportion of medium (43.1%) and high self-esteem (33%) compared to females.

One consideration when using stacked bar plots is the number of variable levels: with many categories, stacked bar plots can become confusing.
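The row-conditional percentages described for Figure 2.9 differ from the joint percentages only in the denominator: each cell is divided by its row total instead of the grand total. As before, only the female/low count (78) comes from the text; the other cells are back-calculated and should be treated as an assumption.

```python
# Same cell counts as the contingency table (back-calculated, except female/low = 78).
table = {
    "male": {"low": 26, "medium": 47, "high": 36},
    "female": {"low": 78, "medium": 37, "high": 34},
}
levels = ["low", "medium", "high"]

# Conditional distribution of self-esteem given sex: divide by the ROW total.
conditional_pct = {}
for sex, cells in table.items():
    row_total = sum(cells.values())
    conditional_pct[sex] = {
        lvl: round(100 * cells[lvl] / row_total, 1) for lvl in levels
    }

for sex, pct in conditional_pct.items():
    print(sex, pct)
```

Each row now sums to roughly 100% (up to rounding), which is what allows a 100% stacked bar plot to compare the two groups directly.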

2.3 Summarizing numerical data (Summary Statistics)

Summary measures are single numerical values that summarize a set of data. Numeric data can be described using two main types of summary measures (summarized in the table below).

Measures of central location: These describe the “center” of the data distribution. Common examples include the mean, median, and mode.

Measures of dispersion: These quantify the spread of values around the central value. Examples include the range, interquartile range (IQR), variance, and standard deviation.

| Measures of Central Location | Measures of Dispersion |
|---|---|
| Mean | Variance |
| Median | Standard Deviation |
| Mode | Range (Minimum, Maximum) |
| | Interquartile Range (1st and 3rd Quartiles) |

Additionally, measures of shape such as the sample coefficients of skewness and kurtosis provide further insights by revealing the overall shape and characteristics of the distribution.

2.3.1 Measures of central location

A. Sample Mean or Average

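The arithmetic mean, or average, denoted as $\bar{x}$, is the sum of all observed values divided by the number of observations, $n$:

$$\bar{x} = \frac{x_1 + x_2 + \dots + x_n}{n} = \frac{1}{n}\sum_{i=1}^{n} x_i \tag{2.1}$$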

Example

We subset the data to a smaller sample to make it easier to manually calculate the summary measures. As an example, we will use the number of hours per day spent on the internet by 15-year-old females with low self-esteem, which are as follows:

To calculate the mean, we use Equation 2.1:

$$\bar{x} = \frac{4 + 4 + 5 + 8 + 12}{5} = \frac{33}{5} = 6.6 \text{ hours}$$

To highlight how outliers affect the mean, suppose we add a value of 24 to the dataset. This new data point is considered an outlier, as it is substantially higher than the other values in the dataset. Since 24 hours is the maximum possible in a single day, this extreme value clearly stands out from the rest of the observations.

Our new dataset becomes:

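We can determine the new mean, $\bar{x}_{new}$, using Equation 2.1:

$$\bar{x}_{new} = \frac{4 + 4 + 5 + 8 + 12 + 24}{6} = \frac{57}{6} = 9.5 \text{ hours}$$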

After adding this outlier, the mean increased from 6.6 to 9.5 hours. This rise of 2.9 hours, caused by a single observation, illustrates how outliers can distort the average and make it less representative of the dataset.
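The effect of the outlier on the mean can be checked numerically. The five values below (4, 4, 5, 8, 12 hours) are an assumption: they are the only set consistent with the results worked out in this section (mean 6.6, median 5, mode 4, minimum 4, maximum 12), and their original order is not known. The hypothetical helper `arithmetic_mean` implements Equation 2.1 and is compared with Python's built-in `statistics.mean`.

```python
from statistics import mean

# Values reconstructed from the worked example (assumption; order is arbitrary).
hours = [4, 4, 5, 8, 12]
hours_with_outlier = hours + [24]


def arithmetic_mean(values):
    """Equation 2.1: sum of the observations divided by their number."""
    return sum(values) / len(values)


print(arithmetic_mean(hours), arithmetic_mean(hours_with_outlier))
print(mean(hours), mean(hours_with_outlier))  # standard-library equivalent
```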

Advantages of mean

- It uses all the data values in the calculation and is the balance point of the data.

- It is algebraically defined and thus mathematically manageable.

Disadvantages of mean

- It is highly influenced by the presence of outliers—values that are abnormally high or low—making it a non-resistant summary measure.

- It cannot be easily determined by simply inspecting the data and is usually not equal to any of the individual values in the sample.

B. Median of the sample

The sample median, denoted as md, is an alternative measure of location that is less sensitive to outliers than the mean.

The median is calculated by first sorting the observed values (i.e., arranging them in ascending or descending order) and then selecting the middle one. If the number of observations is odd, the median is the middle value of the sorted data. If the number of observations is even, the median is the average of the two middle values.

Example

First, we sort the observed values from smallest to largest:

Observed values:

Sorted values: 4, 4, 5, 8, 12

The number of observations is 5, which is an odd number; therefore, the median is the middle (third) value of the sorted data:

$$md = 5 \text{ hours}$$

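Now let’s examine how the median responds to an outlier by adding the value of 24 to the data. The number of observations becomes 6, which is an even number, so the new median, $md_{new}$, is the average of the two middle values (the third and fourth) of the sorted data 4, 4, 5, 8, 12, 24:

$$md_{new} = \frac{5 + 8}{2} = 6.5 \text{ hours}$$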

We observe that the median is not strongly influenced by the addition of the outlier, as it only increased from 5 to 6.5. This demonstrates that the median is more resistant to outliers compared to the mean.
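The sort-then-pick procedure translates directly into code. This sketch assumes the same reconstructed values (4, 4, 5, 8, 12 hours); the helper name `sample_median` is hypothetical, and its result is compared with `statistics.median`.

```python
from statistics import median


def sample_median(values):
    ordered = sorted(values)  # step 1: sort the observations
    n = len(ordered)
    mid = n // 2
    if n % 2 == 1:
        return ordered[mid]  # odd n: the single middle value
    return (ordered[mid - 1] + ordered[mid]) / 2  # even n: average of the two middle values


hours = [4, 4, 5, 8, 12]  # reconstructed values (assumption)
print(sample_median(hours), sample_median(hours + [24]))
print(median(hours), median(hours + [24]))  # standard-library equivalent
```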

Advantages of median

- It is resistant to extreme values (outliers) compared to the mean.

Disadvantages of median

- It depends only on the middle value(s) and ignores the magnitudes of the other observations, potentially losing some information about the data.

C. Mode of the sample

Another measure of location is the mode of the sample.

The mode is the value that occurs most frequently in a set of data values.

Example

In our example, the value of 4 appears twice in the data:

4, 4, 5, 8, 12

Therefore, the mode is 4 hours.

It’s important to note that some datasets may not have a mode if each value occurs only once. For example, if we replace one of the fours with a three, the dataset becomes 3, 4, 5, 8, 12; no value repeats, so there is no mode.

However, if we replace the 12 with an 8, the dataset becomes:

4, 4, 5, 8, 8

Here, both 4 and 8 appear twice, making the dataset bimodal, meaning it has two modes.
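The three mode cases (one mode, no mode, two modes) can be handled by counting occurrences. This is a sketch with a hypothetical helper `sample_modes`; it treats "every value occurs once" as having no mode, matching the text above.

```python
from collections import Counter


def sample_modes(values):
    """Return every value with the highest count, or [] if no value repeats."""
    counts = Counter(values)
    top = max(counts.values())
    if top == 1:
        return []  # every value occurs only once: no mode
    return sorted(v for v, c in counts.items() if c == top)


print(sample_modes([4, 4, 5, 8, 12]))  # one mode
print(sample_modes([3, 4, 5, 8, 12]))  # no mode
print(sample_modes([4, 4, 5, 8, 8]))   # bimodal
```

Python's `statistics.multimode` is close but not identical: when no value repeats, it returns all values rather than an empty list, which is why the check on `top == 1` is written out explicitly here.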

2.3.2 Measures of dispersion

A. Range of the sample

The range is the difference between the maximum and minimum values in a dataset:

$$\text{Range} = \text{Max} - \text{Min} \tag{2.2}$$

The minimum (Min) value represents the lowest value observed in a dataset, while the maximum (Max) value represents the highest value. These values provide valuable insights into the range and potential outliers within the dataset.

Example

Let’s determine the range for the sorted data in our example:

4, 4, 5, 8, 12

The minimum is Min = 4 hours, and the maximum is Max = 12 hours. Therefore, according to Equation 2.2:

$$\text{Range} = 12 - 4 = 8 \text{ hours}$$

Let’s add the extreme value of 24 to the data.

In this case, the range becomes:

$$\text{Range}_{new} = 24 - 4 = 20 \text{ hours}$$

The main disadvantages of the range as a measure of dispersion are its sensitivity to outliers and the fact that it uses only the extreme values, ignoring all other data points.
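Because the range depends only on the two extreme values, a single outlier can change it dramatically. A minimal sketch of Equation 2.2, using the reconstructed example values (an assumption) and a hypothetical helper `sample_range`:

```python
def sample_range(values):
    """Equation 2.2: maximum minus minimum."""
    return max(values) - min(values)


hours = [4, 4, 5, 8, 12]  # reconstructed values (assumption)
print(sample_range(hours), sample_range(hours + [24]))
```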

B. Inter-quartile range of the sample

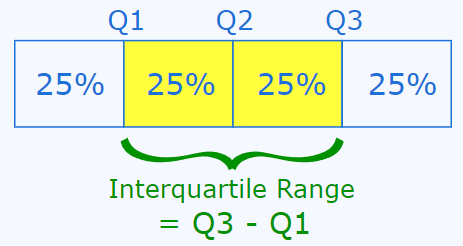

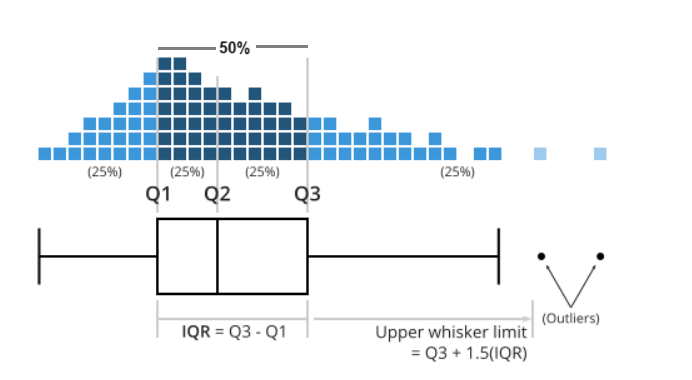

In the presence of outliers, the interquartile range (IQR) can provide a more accurate measure of the spread of the majority of the data. Before we define the interquartile range (IQR), let’s first clarify some basic concepts, specifically quantiles and quartiles.

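A quantile indicates the value below which a certain proportion of the data falls. The most commonly used quantiles are known as quartiles:

- First quartile (Q1, lower quartile): about 25% of the data fall below it.

- Second quartile (Q2): about 50% of the data fall below it; this is the median.

- Third quartile (Q3, upper quartile): about 75% of the data fall below it.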

The interquartile range is the difference between the third quartile (upper quartile) and the first quartile (lower quartile) of an ordered dataset:

$$IQR = Q_3 - Q_1$$

Therefore, the IQR focuses on the middle 50% of the dataset.

It’s important to note that different statistical software packages may produce slightly different quartiles and interquartile ranges (IQRs) for the same dataset, especially when there are only a few values present. This discrepancy is due to the numerous definitions of sample quantiles used in statistical software packages (Hyndman and Fan 1996). While discussing these differences is beyond the scope of this introductory course, we will focus on the results provided by Jamovi.

Example

In our example, the first quartile is

Therefore, the inter-quartile range is:

After adding an extreme value such as 24, our example sorted dataset is as follows:

4, 4, 5, 8, 12, 24

We would expect

As with the range, greater variability in the data typically leads to a larger IQR. However, unlike the range, the IQR is resistant to outliers, as it is not influenced by observations below the first quartile or above the third quartile.
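As noted above, quartiles depend on the quantile definition used by the software. The sketch below uses `statistics.quantiles(..., method="inclusive")`, which interpolates linearly between order statistics (the same rule as R's default, type 7). We assume, but have not confirmed for every version, that this matches Jamovi's output; the example values are the reconstructed ones (an assumption), and `quartiles` and `iqr` are hypothetical helper names.

```python
from statistics import quantiles


def quartiles(values):
    # "inclusive": linear interpolation between order statistics (R type 7).
    # Assumed to match Jamovi; other software may use a different definition.
    q1, q2, q3 = quantiles(values, n=4, method="inclusive")
    return q1, q2, q3


def iqr(values):
    q1, _, q3 = quartiles(values)
    return q3 - q1


hours = [4, 4, 5, 8, 12]  # reconstructed values (assumption)
print(quartiles(hours), iqr(hours))
print(quartiles(hours + [24]), iqr(hours + [24]))
```

Under this definition the outlier raises the IQR far less than it raises the range, although with only five or six values the interpolation itself still moves the quartiles somewhat.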

C. Sample variance

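Sample variance, denoted as $s^2$, measures how spread out the observations are around the sample mean.

Mathematically, the sample variance, $s^2$, is the sum of the squared deviations of the observations from the mean, divided by $n - 1$:

$$s^2 = \frac{1}{n-1}\sum_{i=1}^{n}(x_i - \bar{x})^2 \tag{2.4}$$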

Example

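The original values are:

4, 4, 5, 8, 12

and we have calculated the mean, $\bar{x} = 6.6$ hours.

Thus, according to Equation 2.4:

$$s^2 = \frac{(4-6.6)^2 + (4-6.6)^2 + (5-6.6)^2 + (8-6.6)^2 + (12-6.6)^2}{5-1} = \frac{47.2}{4} = 11.8 \text{ hours}^2$$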

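Now let’s examine how the variance responds to an outlier by adding the value of 24 to the data. The new mean is $\bar{x}_{new} = 9.5$ hours, so the new variance is:

$$s^2_{new} = \frac{(4-9.5)^2 + (4-9.5)^2 + (5-9.5)^2 + (8-9.5)^2 + (12-9.5)^2 + (24-9.5)^2}{6-1} = \frac{299.5}{5} = 59.9 \text{ hours}^2$$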

The variance is sensitive to outliers because it is based on the squared deviations from the mean. As a result, even a single extreme value can significantly increase the variance, making it a less reliable measure of spread when outliers are present.
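Equation 2.4 can be implemented in a few lines and checked against `statistics.variance`, which also divides by $n - 1$. The data are the reconstructed example values (an assumption), and `sample_variance` is a hypothetical helper name.

```python
from statistics import variance


def sample_variance(values):
    n = len(values)
    x_bar = sum(values) / n
    # Equation 2.4: sum of squared deviations from the mean, divided by n - 1.
    return sum((x - x_bar) ** 2 for x in values) / (n - 1)


hours = [4, 4, 5, 8, 12]  # reconstructed values (assumption)
print(sample_variance(hours), sample_variance(hours + [24]))
print(variance(hours), variance(hours + [24]))  # standard-library equivalent
```

The squared deviation of the outlier alone, $(24 - 9.5)^2 = 210.25$, accounts for most of the new sum of squares (299.5).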

Because the variance is expressed in squared units (here, hours²), it is not the preferred metric for describing the variability of data.

D. Standard deviation of the sample

Standard deviation is one of the most common measures of spread and is particularly useful for assessing how far the data points are distributed from the mean.

Standard deviation, denoted as s or sd, represents the typical distance of observations from the mean. It is calculated as the square root of the sample variance.

Example

The standard deviation is:

$$s = \sqrt{s^2} = \sqrt{11.8} \approx 3.44 \text{ hours}$$

and is expressed in the same units as the original data values.

With the addition of the value 24, the standard deviation becomes:

$$s_{new} = \sqrt{59.9} \approx 7.74 \text{ hours}$$

The standard deviation uses all observations in a dataset for its calculation and is expressed in the same units as the original data. However, it is sensitive to outliers, which can substantially influence its value.
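The standard deviation is the square root of the sample variance, so it returns to the original units (hours). This sketch uses the reconstructed example values (an assumption) and a hypothetical helper `sample_sd`, cross-checked with `statistics.stdev`.

```python
from math import sqrt
from statistics import stdev


def sample_sd(values):
    n = len(values)
    x_bar = sum(values) / n
    s2 = sum((x - x_bar) ** 2 for x in values) / (n - 1)  # sample variance
    return sqrt(s2)


hours = [4, 4, 5, 8, 12]  # reconstructed values (assumption)
print(round(sample_sd(hours), 2), round(sample_sd(hours + [24]), 2))
print(round(stdev(hours), 2), round(stdev(hours + [24]), 2))
```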

2.3.3 Plots for continuous variables

When visualizing continuous data, several types of plots can be used to understand the distribution, spread, and overall patterns in the data.

In the following examples, we will use the complete dataset consisting of 258 observations.

A. Frequency histogram

The most common way to present the frequency distribution of numerical data, especially when there are many observations, is through a histogram. Histograms visualize the data distribution as a series of bars without gaps between them (unless a particular bin has zero frequency), in contrast to bar plots. Each bar typically represents a range of numeric values known as a bin (or class), with the height of the bar indicating the frequency of observations (counts) within that particular bin. Below are the frequency histograms for age and time_spent:

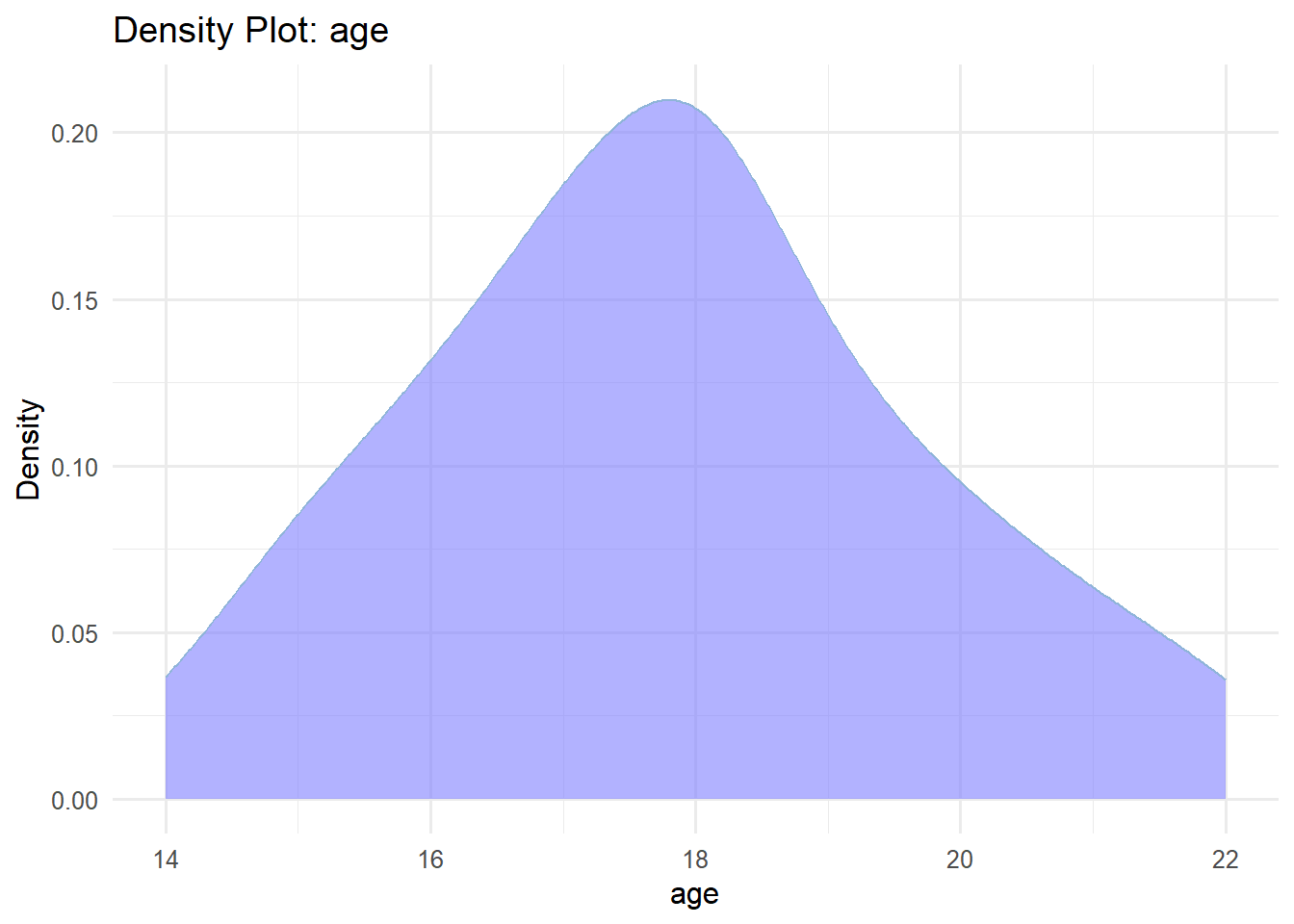

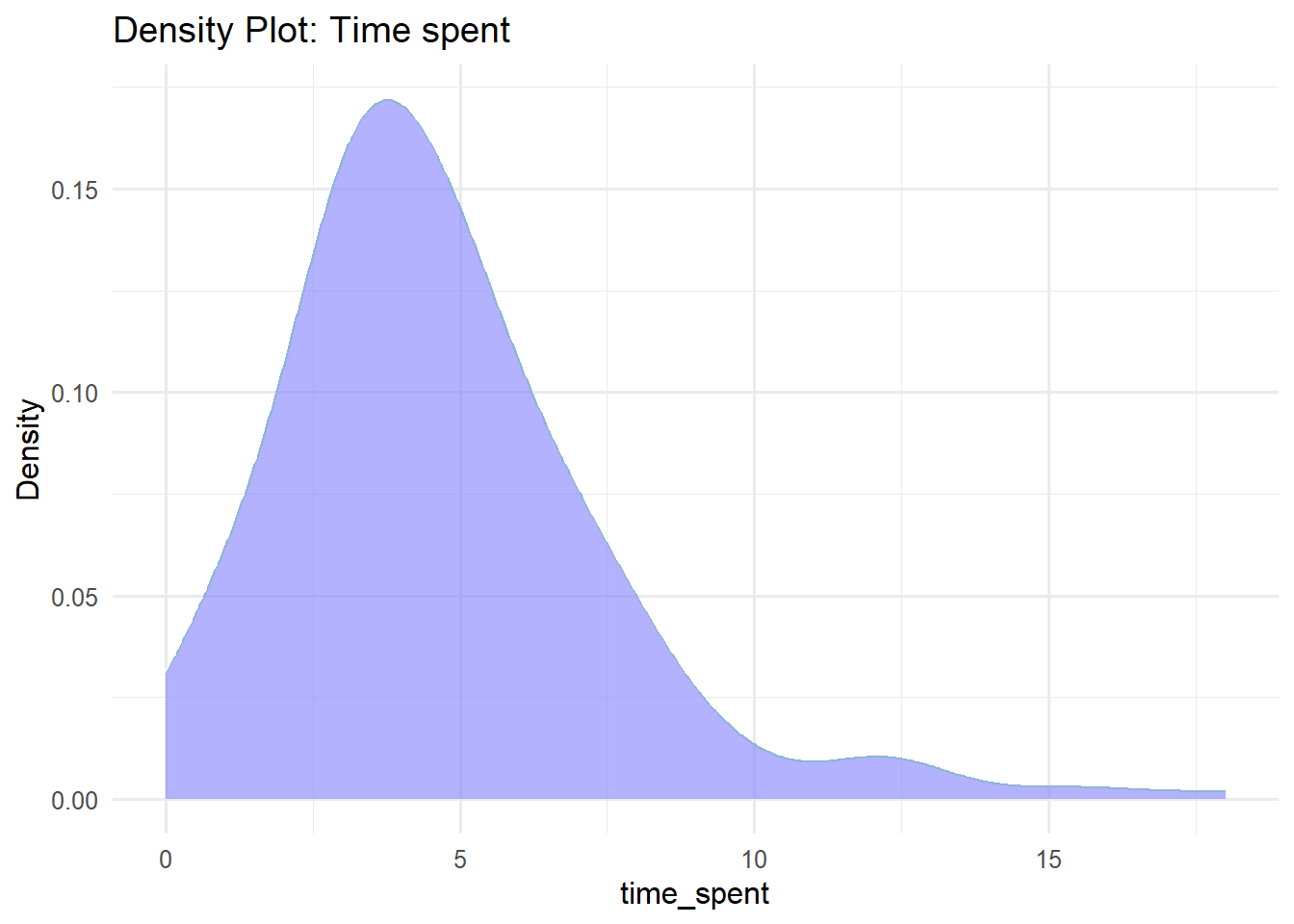

In Figure 2.14(a), the age distribution exhibits a symmetrical bell-shaped form, with the highest frequency occurring around 18 years old. The participants’ ages range approximately from 14 to 22 years. In Figure 2.14(b), the time_spent variable follows a right-skewed distribution, with its peak around 3 hours and values ranging roughly from 0 to 18 hours.

To summarize, a histogram provides information on:

- The shape of the distribution (symmetrical or asymmetrical) and the presence of any outliers.

- The location of the peak(s) in the distribution.

- The degree of variability within the data, that is, the spread and range covered by the data.
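The core of a histogram is counting how many observations fall into each bin. This is a minimal sketch with a hypothetical helper `bin_counts`; it assumes half-open bins [start + k·width, start + (k+1)·width), whereas plotting software may close the last bin or choose bin edges automatically. It is applied to the small reconstructed example (an assumption) rather than the full 258 observations.

```python
def bin_counts(values, start, width, n_bins):
    """Count observations in half-open bins [start + k*width, start + (k+1)*width).

    Values outside [start, start + n_bins*width) are ignored.
    """
    counts = [0] * n_bins
    for x in values:
        k = int((x - start) // width)  # index of the bin containing x
        if 0 <= k < n_bins:
            counts[k] += 1
    return counts


hours = [4, 4, 5, 8, 12]  # reconstructed values (assumption)
print(bin_counts(hours, start=0, width=4, n_bins=4))
```

The bar heights of a frequency histogram are exactly these counts; changing `width` changes how smooth or jagged the histogram looks.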

B. Density plot

A density plot is another way to represent the distribution of numerical data and can be seen as a smoothed version of a histogram (Figure 2.15). Density curves are scaled so that the total area under the curve equals one.
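One common way to build a density curve is a Gaussian kernel density estimate: a small normal "bump" is placed on each observation and the bumps are averaged. We do not know which estimator or bandwidth Jamovi uses; the bandwidth of 1.5 hours and the helper names below are assumptions chosen for illustration. The trapezoidal check confirms that the area under the curve is close to one.

```python
import math


def gaussian_kde(values, bandwidth):
    """Return f(x): the average of Gaussian bumps centred on each observation."""
    n = len(values)
    scale = 1 / (n * bandwidth * math.sqrt(2 * math.pi))

    def density(x):
        return scale * sum(
            math.exp(-0.5 * ((x - xi) / bandwidth) ** 2) for xi in values
        )

    return density


def trapezoid_area(f, lo, hi, steps):
    """Approximate the integral of f over [lo, hi] with the trapezoidal rule."""
    h = (hi - lo) / steps
    ys = [f(lo + i * h) for i in range(steps + 1)]
    return h * (sum(ys) - (ys[0] + ys[-1]) / 2)


hours = [4, 4, 5, 8, 12]  # reconstructed values (assumption)
f = gaussian_kde(hours, bandwidth=1.5)  # bandwidth picked by hand (assumption)
area = trapezoid_area(f, lo=-10, hi=30, steps=4000)
print(round(area, 4))
```

A smaller bandwidth gives a bumpier curve (closer to a narrow-bin histogram); a larger one smooths more, but the area stays equal to one either way.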

C. Box Plots

Box plots are useful for visualizing the central tendency and spread of continuous data, particularly when comparing distributions across multiple groups.

This type of graph uses boxes and lines to represent the distributions. In Figure 2.16 the box boundaries indicate the interquartile range (IQR), covering the middle 50% of the data, with a horizontal line inside the box representing the median. Whiskers extend from the box to the most extreme data points that are not flagged as outliers (in the common Tukey convention, points within 1.5 × IQR of the box edges). Data points lying beyond the whiskers are displayed as individual dots and are considered potential outliers.
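The quantities drawn in a box plot can be computed directly. This sketch assumes the common Tukey rule (fences at 1.5 × IQR beyond the quartiles) and linear-interpolation quartiles (`method="inclusive"`); whether Jamovi uses exactly these conventions is an assumption. The helper `box_plot_stats` is hypothetical, and the data are the reconstructed example values with the outlier 24 added.

```python
from statistics import quantiles


def box_plot_stats(values, k=1.5):
    ordered = sorted(values)
    q1, med, q3 = quantiles(ordered, n=4, method="inclusive")
    iqr = q3 - q1
    lower_fence, upper_fence = q1 - k * iqr, q3 + k * iqr
    inside = [x for x in ordered if lower_fence <= x <= upper_fence]
    return {
        "q1": q1,
        "median": med,
        "q3": q3,
        "whisker_low": min(inside),   # most extreme non-outlier below the box
        "whisker_high": max(inside),  # most extreme non-outlier above the box
        "outliers": [x for x in ordered if x < lower_fence or x > upper_fence],
    }


stats = box_plot_stats([4, 4, 5, 8, 12, 24])  # reconstructed values + outlier
print(stats)
```

With these assumptions the upper fence is 11 + 1.5 × 6.75 = 21.125, so the upper whisker stops at 12 and the value 24 is drawn as a separate dot.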